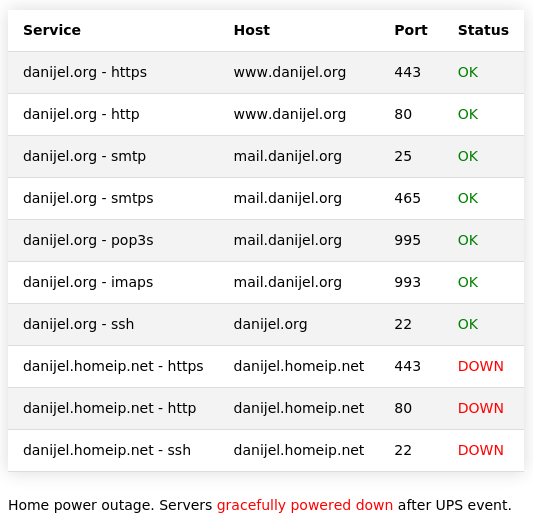

Since yesterday I’ve been doing some infrastructure work on the servers: essentially a “traffic light” that reports the online status of services, plus the plumbing for a simultaneous graceful shutdown of all the home servers attached to the UPS.

This is what it looks like on the danijel.org site when the home copy is down due to a simulated power outage (unplugging the UPS from the grid). When I restore power, it takes 10 to 15 minutes for all the services to refresh and come back online. It’s not instantaneous because I had to compromise between responsiveness and wasting resources on crontab jobs that run more often than normal daily needs require. On power-up, the servers are up within half a minute, the ADSL router takes a few minutes to get online, and then the dynamic DNS IP is refreshed every ten minutes; that refresh is the key step that makes the local server visible on the Internet. Then it takes up to five more minutes for the danijel.org server to refresh the diagnostic data and report the updated status.

Detecting a power outage isn’t instantaneous either. When power is lost, the UPS waits five minutes for it to come back and then sends out a broadcast. Within two minutes everything is powered down, and within another five minutes the online server refreshes the status. Basically, that’s also around 15 minutes.
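The timings above can be summed into rough worst-case bounds. This is a sketch under two assumptions: each periodic job can add at most one full period of waiting (the triggering event may land just after a cron run), and the other steps take the durations given in the text. $t_{\text{router}}$ stands for the router’s unspecified “few minutes”.

$$
T_{\text{down}} \le \underbrace{5}_{\text{UPS grace period}} + \underbrace{2}_{\text{shutdown}} + \underbrace{5}_{\text{status refresh}} = 12\ \text{min}
$$

$$
T_{\text{up}} \le \underbrace{0.5}_{\text{server boot}} + t_{\text{router}} + \underbrace{10}_{\text{DDNS refresh}} + \underbrace{5}_{\text{status refresh}} = 15.5 + t_{\text{router}}\ \text{min}
$$

These are upper bounds; the cron jobs usually don’t have to wait a full period, so typical times come in lower. Tightening the bounds means shortening the cron periods, which is exactly the resource trade-off described above.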

Do I have some particular emergency in mind? Not really. It’s just that electricity where I live is less than reliable, and every now and then there’s a power failure that used to force me to shut the servers down manually, to protect the SSDs from a potentially fatal sudden power loss during a write. Only one machine can be connected to the UPS via USB, and only that one used to shut down automatically, leaving the others in a pickle. So I eventually got around to configuring everything to happen automatically, even while I sleep, and while I was at it, I wrote a monitoring system for the website. It showed all kinds of false outages during testing (no, I wasn’t having some kind of massive failure), but I’m happy with how it runs now, so I consider it done. The monitoring system is partly for me, so I can see when the power is down while I’m away from home, and partly to let you know when a power outage is cutting off communication.
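A monitoring “traffic light” of this kind can be as simple as a periodic HTTP probe. The following is a minimal sketch, not the actual implementation: it assumes the online server runs the check from cron, and `HOME_URL`, `STATUS_FILE`, `probe` and `record_status` are hypothetical names introduced only for illustration.

```python
# Minimal sketch of a "traffic light" status probe. Assumptions: the online
# server runs this periodically (e.g. from cron every five minutes), and the
# URL and status-file path below are hypothetical placeholders.
import http.client
import json
import time
import urllib.request
from pathlib import Path

HOME_URL = "http://home.example.net/"   # hypothetical: point at the home copy
STATUS_FILE = Path("home_status.json")  # hypothetical: read by the status page


def probe(url: str, timeout: float = 15.0) -> bool:
    """Return True if url answers with a non-error HTTP status within timeout."""
    # An empty ProxyHandler ignores proxy environment variables, so the probe
    # tests direct reachability of the site.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError (4xx, 5xx), DNS failures, refused connections and
        # timeouts all derive from OSError; HTTPException covers malformed replies.
        return False


def record_status(url: str, path: Path) -> dict:
    """Probe url and save the result as a small JSON record for the status page."""
    online = probe(url)
    status = {
        "url": url,
        "online": online,
        "light": "green" if online else "red",
        "checked_at": int(time.time()),
    }
    path.write_text(json.dumps(status))
    return status
```

A small wrapper calling `record_status(HOME_URL, STATUS_FILE)` could be scheduled with a `*/5 * * * *` crontab entry, and the page would read the JSON file to decide which light to show. The explicit timeout matters: when the home line is down, an unanswered request would otherwise hang instead of reporting “red”.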

The danijel.homeip.net website is a copy of the main site that is updated hourly. It’s designed so that in an emergency I can stop the hourly updates, and it instantly becomes the main website, where both the forum members and I can post. Essentially, it’s a BBS hosted at my home, meant to keep communications going if the main site dies. Since I can’t imagine many scenarios where the main site dies and the DDNS service keeps working, it’s probably a silly idea, but I like having backups to the point where my backups have backups.
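The “stop the updates and it becomes the main site” design can be sketched as an hourly job with a kill switch. This is a hypothetical illustration, not the actual setup: it assumes the update is some external command (for example an `rsync` or database-dump pipeline, not shown) and that the presence of a flag file means “emergency, stop overwriting the home copy”. `hourly_update` and the flag file are invented names.

```python
# Minimal sketch of the hourly mirror update with an emergency stop.
# Assumptions: the update is some external command (not shown), and the
# presence of a hypothetical flag file means "stop overwriting the home copy".
import subprocess
from pathlib import Path
from typing import Sequence


def hourly_update(flag: Path, sync_cmd: Sequence[str]) -> str:
    """Run one scheduled update unless the emergency flag is set.

    Returns "skipped" when the flag exists (the home copy is now the live
    site and must keep its own posts); otherwise runs sync_cmd and returns
    "synced". A failing sync raises subprocess.CalledProcessError.
    """
    if flag.exists():
        return "skipped"
    subprocess.run(list(sync_cmd), check=True)
    return "synced"
```

With this shape, run from an hourly crontab entry (`0 * * * *`), the whole switchover is creating the flag file: the next scheduled run simply leaves the home copy alone, so posts made there are no longer overwritten.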

Also, I’m under all sorts of pressure that make it impossible for me to do anything really sophisticated, so I might as well keep my UNIX and coding skills sharp. 🙂